- I might get your name wrong. To illustrate: long ago, for whatever reason, you might have signed up as, oh, “Patrick” but no one except for, say, your mother ever calls you that. At a minimum, addressing you incorrectly feels rude, and in some cases it can do real harm: years ago, for example, a mass email from Steyer inadvertently deadnamed a longtime Steyer talent, a hurtful error that I will always regret.

- I might be sending you content that I would never in a million years send to you 1:1 if I knew something about your current situation. For example, say you were just laid off, as so many in the tech sector have been in recent months. In that moment, I would want to speak to you about your hugely transferable skills and the various companies, including Steyer, that are actually hiring right now. I would never intentionally compound your stress by blithely riffing (as I am about to do below) on the profound market disruptions still to come.

This decision—not to autopopulate my newsletters with subscriber names—is a small one that, I suspect, the vast majority of you (you, plural) don’t even care about. But it’s a human decision, one of many distinctly human decisions that I make when I write each installment of Workings. And THAT—the fact that there is actually a real person behind this content—is something that I think you, and most other people, DO care about.

This brings me to the short version of my answer to the question, “Will ChatGPT replace us all?”: No...not if we’re smart about it.

ChatGPT, one of many generative AI tools that produce original* content, is AMAZING. Like many of you, I’ve spent big chunks of the last 49 days checking it out. (The current version was released into the wild by OpenAI on November 30th, 2022.) While we can (and should!) laugh at some of what it produces, we should also marvel. ChatGPT is in its infancy, “learning” from every interaction, and even so: its baby steps include some moves that are pretty darn sophisticated in many ways. Bottom line: I think it would be insanely shortsighted to dismiss ChatGPT, or to suggest that generative AI more broadly won’t have a huge impact on society and especially on those of us who produce content for a living. OF COURSE IT WILL.

That said, it’s not ‘Game Over’ for us mere mortals, and unless we fall asleep at the wheel, it need never be. Last I checked, we (i.e., humans) developed technology to help people. And it can. It really, REALLY can. That’s part of why I, a card-carrying softie (a romcom-loving lit major!), deeply appreciate the hard, cold world of technology. But, c’mon all you (granted, brilliant) engineers: we know where this can go if we’re not careful, right? We’ve all seen the movies. So, what does “careful” look like? Some ideas:

1. As an industry, we need to forget Meta’s (née Facebook’s) erstwhile motto “Move Fast and Break Things.” Some things—like democracy, the environment, mental health, and trust (interpersonal and institutional)—are too precious to treat so cavalierly. Repairs can be slow and difficult and are sometimes even impossible. So, from the get-go, we should think really hard not just about the benefits of new technologies but about potential adverse consequences. In the case of ChatGPT, for example, it’s critical to think through the impact on society of driving the cost of producing misinformation to essentially zero.

2. As a society, we need to fundamentally redesign our educational and employment systems. One of the inevitable (and most immediately painful) consequences of automation is unemployment. For all of human history, new tools have been displacing old ways of doing things, and jobs—and in many cases whole job categories—have gone away. This is only going to continue, and by all accounts, the pace of change is only going to increase. Moreover, there’s a vision (to which I mostly subscribe) in which this isn’t necessarily a bad thing: change can be revitalizing at every level—for individuals, families, organizations, communities, and countries. But in the absence of certain social safety nets (e.g. universal basic income, healthcare coverage that isn’t tied to employment, adequate job training, etc.), unemployment is too often devastating—not just to individuals but to families and in some cases whole communities. Now that the AI revolution is actually here, we need to do what we should have started decades ago: we need to re-think every phase of our educational system (including adult education, which will need to be never-ending), with a strong focus on developing our most human talents: creativity, critical thinking, communicating in abstractions, providing value-based judgments, demonstrating empathy, etc.

As for us content professionals:

We need to keep cultivating these same human talents mentioned above—while figuring out how best to incorporate the latest and greatest tools available to us. Here at Steyer, the new year has kicked off with a happy round of new business meetings, and it’s our most human capabilities—together with our technological sophistication—that our clients, new and old, are after. If you also make a living creating business content: integrate your most valuable human qualities with the best tools available to you, and I think, like us, you’ll do just fine. ::fingers crossed::

I’ll stop there for today. I’ll be chipping away at this topic—in various ways—for years to come.

Thanks for reading,

Kate

Note:

*I asterisked “original” because, for now anyway, all of these generative AI tools draw on content that was produced by actual human beings, raising a whole host of copyright/‘fair use’ issues alongside the even more profound issue of cost-free misinformation that I highlighted above.

An unrelated but sincere ask:

A former Steyer talent (Leith McCombs) is working pro bono to bring about more inclusive and equitable retirement policies in the tech sector. Currently, many large companies require, as a condition of full retirement benefits, a length of uninterrupted service that is hard to achieve if one has certain health conditions and/or caregiving responsibilities. To help describe the scale and scope of the problem, Leith is gathering as much hard data as he can, so if you’ve ever left a role at a U.S. tech company, please consider filling out his survey. I can say this because I know Leith (his character and his tech skills): your privacy will be protected, and your contributions will be used for good and not evil.

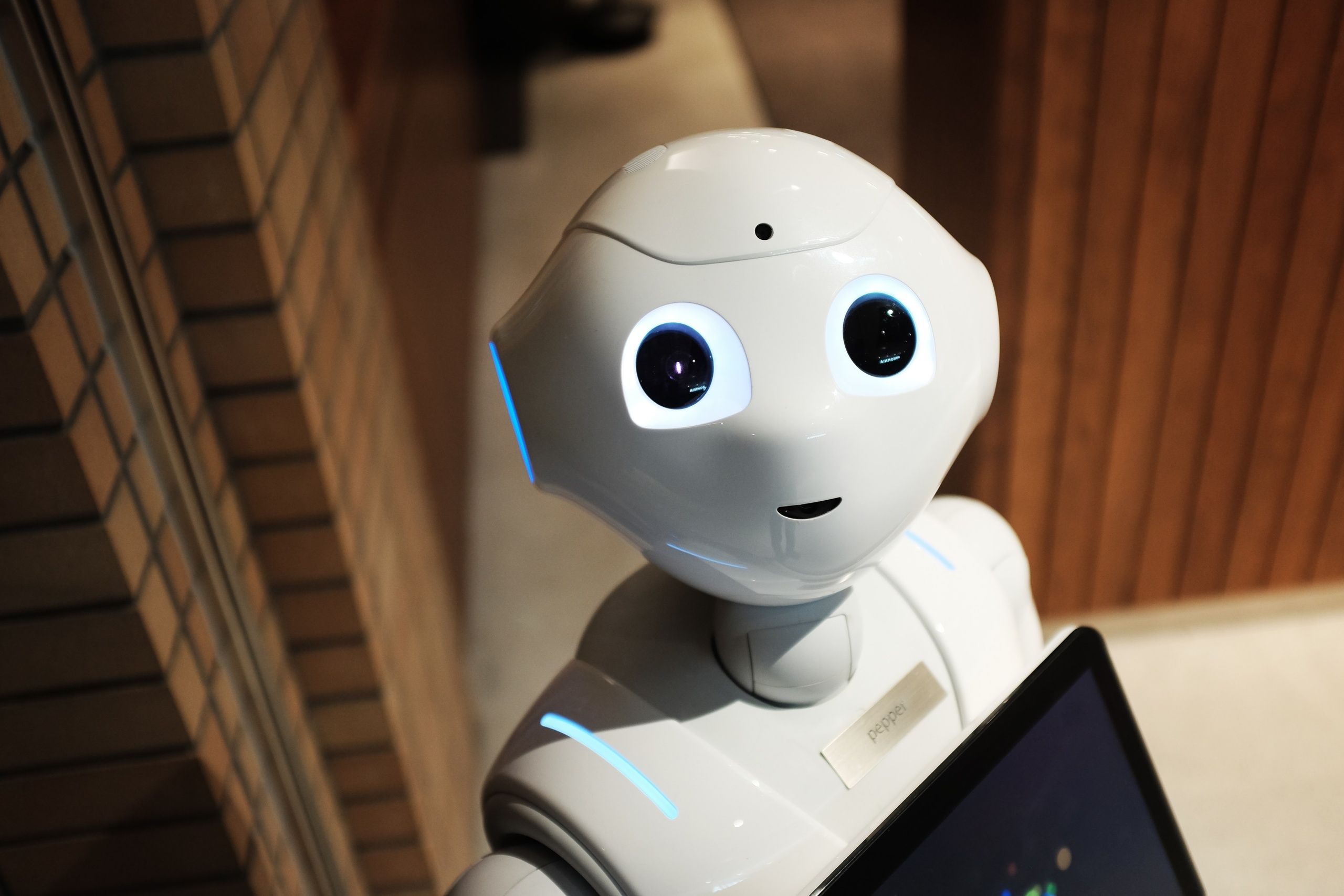

Photo by Alex Knight on Unsplash

Hello from Steyer!
